Photonics Computing: Silicon's Luminous Challenger

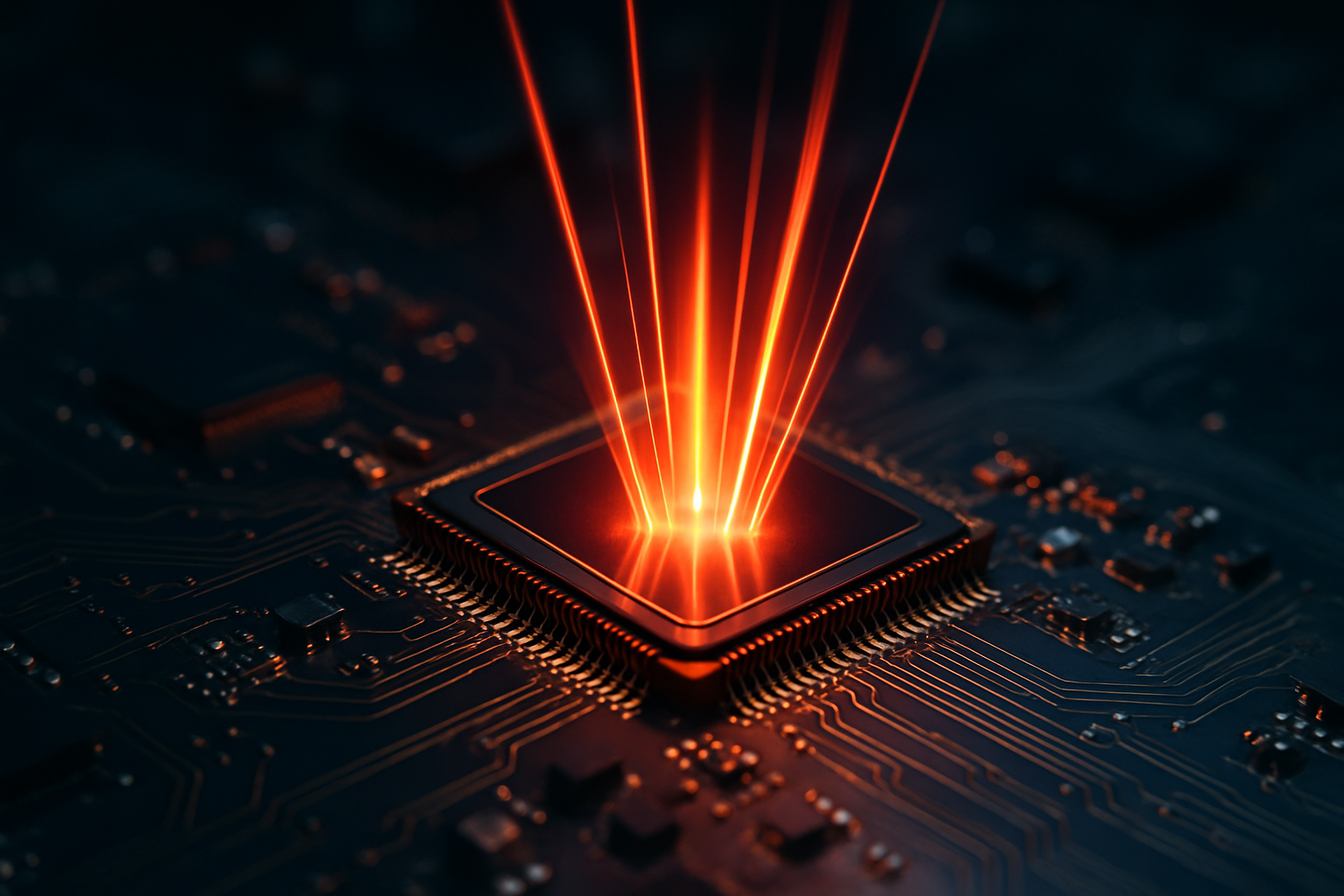

The next wave of computing isn't only about making transistors smaller. It's about moving work from electrons to light. As traditional silicon approaches its physical limits, major tech players are investing hundreds of millions of dollars in photonic chips that process information using photons instead of electrons. This shift promises hardware that runs cooler, faster, and more efficiently than today's electronics for the workloads it suits. Yet despite its potential, photonics computing remains largely unfamiliar to the broader tech community.

The fundamental physics driving a computing revolution

Traditional computing faces an increasingly stubborn enemy: heat. As we’ve packed more transistors onto silicon chips following Moore’s Law, we’ve hit thermal walls that no clever engineering can fully overcome. The problem is fundamental—moving electrons through semiconductors generates heat through resistance, and that heat has to go somewhere. Today’s high-performance computers require elaborate cooling solutions that consume enormous energy, with data centers alone accounting for approximately 2% of global electricity consumption.

Photonics computing sidesteps much of this problem. Photons travel through waveguides without the resistive heating that electrons incur in wires, although waveguides do have some optical loss. Light beams can also cross paths, or share a single waveguide at different wavelengths, without disturbing each other, which allows many signals to be processed in parallel in ways that are hard to replicate with electronics. Most impressively, photonic systems can perform certain calculations in the time it takes light to pass through the chip, offering theoretical performance improvements measured not in percentage points but in orders of magnitude.
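As a rough sense of scale for how quickly light crosses a chip, the sketch below computes the propagation delay through a waveguide as length times group index divided by the vacuum speed of light. The 10 mm path length and the group index of 4.2 are illustrative assumptions, not figures from any specific device. Light in a silicon waveguide travels several times slower than in vacuum, so the group index matters.

```python
# Propagation delay through a waveguide: t = L * n_g / c
C_VACUUM_M_PER_S = 299_792_458.0  # exact by SI definition


def transit_time_ps(length_mm: float, group_index: float) -> float:
    """Return the propagation delay in picoseconds."""
    length_m = length_mm * 1e-3
    return length_m * group_index / C_VACUUM_M_PER_S * 1e12


if __name__ == "__main__":
    # Assumed, illustrative values: 10 mm optical path, group index 4.2.
    delay = transit_time_ps(length_mm=10.0, group_index=4.2)
    print(f"delay across 10 mm: {delay:.1f} ps")
```

Under these assumptions the delay is about 140 ps, which is shorter than one clock period of a 3 GHz processor (about 333 ps).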

From theoretical concept to silicon reality

The idea of optical computing isn’t new—researchers have explored the concept since the 1960s. What’s changed is our ability to manufacture the necessary components at scale. Recent breakthroughs in silicon photonics allow for the integration of optical components directly onto standard silicon wafers using modified versions of existing semiconductor fabrication techniques.

This manufacturing compatibility has attracted serious interest from industry heavyweights. Intel has invested over $300 million in photonics research, while startups like Lightmatter and Luminous Computing have secured nine-figure funding rounds from venture capitalists betting on photonics as computing’s next frontier. Even cloud giants like Google and Microsoft have publicly acknowledged internal research programs exploring photonic systems for accelerating specific workloads.

The most immediate applications aren’t complete photonic computers but specialized accelerators targeting AI operations. Matrix multiplications—the mathematical foundation of modern machine learning—can be performed with remarkable efficiency using optical systems. Early photonic neural network processors have demonstrated 10-100x improvements in energy efficiency compared to traditional GPUs and TPUs for certain operations.
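To make the link between optics and matrix multiplication concrete, here is a minimal sketch of one building block widely described in the research literature: a Mach-Zehnder interferometer (MZI), which acts as a programmable 2x2 matrix on two optical inputs. It assumes ideal, lossless 50:50 couplers and one particular phase convention. The function names are hypothetical, and this is not a description of any vendor's chip. Larger matrices are typically built from meshes of such elements.

```python
import cmath
import math
from typing import List

Matrix = List[List[complex]]
Vector = List[complex]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two small complex matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def beam_splitter() -> Matrix:
    """Ideal lossless 50:50 coupler (assumed convention)."""
    s = 1 / math.sqrt(2)
    return [[s, 1j * s], [1j * s, s]]


def phase_shifter(phase: float) -> Matrix:
    """Phase shift applied to the upper arm only."""
    return [[cmath.exp(1j * phase), 0j], [0j, 1 + 0j]]


def mzi(theta: float, phi: float) -> Matrix:
    """Input phase phi, coupler, internal phase theta, coupler."""
    m = phase_shifter(phi)
    for stage in (beam_splitter(), phase_shifter(theta), beam_splitter()):
        m = matmul(stage, m)  # later stages multiply from the left
    return m


def apply(m: Matrix, x: Vector) -> Vector:
    """Matrix-vector product: what the light 'computes' as it propagates."""
    return [sum(m[i][k] * x[k] for k in range(len(x))) for i in range(len(m))]


def detected_power(fields: Vector) -> List[float]:
    """Photodetectors measure optical power, the squared field magnitude."""
    return [abs(f) ** 2 for f in fields]


if __name__ == "__main__":
    light_in = [1 + 0j, 0j]  # all light enters port 0
    for theta in (0.0, math.pi / 2, math.pi):
        out = detected_power(apply(mzi(theta, 0.0), light_in))
        print(f"theta={theta:.2f} rad -> port powers {out[0]:.2f}, {out[1]:.2f}")
```

In this model the share of light leaving port 0 is sin²(θ/2), so tuning the internal phase sets a matrix weight. The multiplication itself costs no switching energy in the optical path, although the lasers, phase shifters, and detectors around it still consume power.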

The hybrid approach gaining traction

Rather than attempting a wholesale replacement of electronic computers, the industry is converging on hybrid architectures that combine photonic and electronic components. These systems use photonics for the operations where it excels—data movement and certain calculations—while leveraging traditional electronics for control logic and memory storage where photonic approaches remain impractical.
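One way to reason about what a hybrid design can deliver is Amdahl's law, a general rule for partial acceleration that is brought in here for illustration and is not specific to photonics. If only a fraction of a workload runs on the photonic part, the electronic remainder caps the overall speedup. The fraction and speedup values below are hypothetical.

```python
def amdahl_speedup(accelerated_fraction: float, accelerator_speedup: float) -> float:
    """Overall speedup when only part of the runtime is accelerated."""
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("accelerated_fraction must be between 0 and 1")
    if accelerator_speedup <= 0.0:
        raise ValueError("accelerator_speedup must be positive")
    remaining = 1.0 - accelerated_fraction                   # stays on electronics
    accelerated = accelerated_fraction / accelerator_speedup  # offloaded part
    return 1.0 / (remaining + accelerated)


if __name__ == "__main__":
    # Hypothetical workload: 80% of runtime is matrix math, offloaded
    # to an accelerator assumed to be 100x faster on that portion.
    print(f"overall speedup: {amdahl_speedup(0.8, 100.0):.2f}x")
```

With these assumed numbers the overall gain is a little under 5x, even though the accelerated portion runs 100x faster. This is one reason the control logic, memory access, and data conversion around the photonic core matter as much as the core itself.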

Ayar Labs, one of the sector’s best-funded startups, exemplifies this approach. Its optical I/O solution replaces traditional copper interconnects between chips with optical connections, promising 10x bandwidth improvements and 5x energy reductions. Its technology is being incorporated into Intel’s latest packaging platforms and has caught the attention of the U.S. Department of Defense, which sees its potential for next-generation supercomputing systems.
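The arithmetic behind bandwidth and energy claims like these can be sketched as follows. Link power is bandwidth multiplied by energy per bit. The baseline of 1 Tb/s at 5 pJ/bit is a hypothetical starting point, not a published figure, and the sketch assumes the 5x reduction applies to energy per bit rather than to total power.

```python
def link_power_watts(bandwidth_tbps: float, energy_pj_per_bit: float) -> float:
    """Power drawn by a link: (Tb/s * 1e12 bit/s) * (pJ/bit * 1e-12 J).

    The 1e12 and 1e-12 factors cancel, so the product is already in watts.
    """
    return bandwidth_tbps * energy_pj_per_bit


if __name__ == "__main__":
    # Hypothetical electrical baseline (assumed, not from a datasheet).
    base_bw_tbps, base_pj_per_bit = 1.0, 5.0
    # Apply the claimed ratios: 10x bandwidth, 5x less energy per bit.
    opt_bw_tbps, opt_pj_per_bit = base_bw_tbps * 10, base_pj_per_bit / 5

    print(f"electrical: {link_power_watts(base_bw_tbps, base_pj_per_bit):.1f} W")
    print(f"optical:    {link_power_watts(opt_bw_tbps, opt_pj_per_bit):.1f} W")
```

Under this reading, the optical link moves ten times the data for twice the power (10 W versus 5 W in this example). The gain is in energy per bit, not necessarily in total power draw.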

The hybrid approach makes commercial sense. It allows for incremental adoption of photonic technology without requiring an immediate and risky reinvention of the entire computing stack. Industry analysts expect photonic interconnects to be the first widespread commercial application, followed by accelerators for specific workloads, with fully photonic computers potentially a decade or more away.

Technical challenges that remain unsolved

Despite the enthusiasm and progress, significant obstacles remain. Chief among them is developing practical optical memory that can store photons for extended periods. Unlike electrons, photons don’t naturally stay put—they move at light speed or get absorbed. Current solutions involve converting photons to electronic signals for storage and back again, introducing inefficiencies that undermine some of photonics’ core advantages.

Manufacturing consistency presents another challenge. Photonic components require extreme precision—variations of mere nanometers can dramatically affect performance. While modern semiconductor fabrication is impressive, building optical waveguides, modulators, and detectors at scale with consistent performance remains difficult and expensive.
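A back-of-the-envelope calculation shows why nanometers matter. A width error changes the waveguide's effective refractive index, and the resulting phase error grows with path length: Δφ = 2π · Δn_eff · L / λ. The sensitivity of 0.001 effective-index units per nanometer of width used below is an assumed, order-of-magnitude value for illustration. Real values depend on the waveguide geometry and should come from simulation or measurement.

```python
import math


def phase_error_rad(width_error_nm: float,
                    length_um: float,
                    dneff_dw_per_nm: float = 1e-3,   # assumed sensitivity
                    wavelength_nm: float = 1550.0) -> float:
    """Accumulated phase error from a uniform waveguide width deviation."""
    delta_neff = dneff_dw_per_nm * width_error_nm  # effective-index shift
    length_nm = length_um * 1e3
    return 2 * math.pi * delta_neff * length_nm / wavelength_nm


if __name__ == "__main__":
    # Assumed case: 1 nm width error along a 1 mm (1000 um) waveguide.
    err = phase_error_rad(width_error_nm=1.0, length_um=1000.0)
    print(f"phase error: {err:.2f} rad ({err / (2 * math.pi):.2f} of a full cycle)")
```

With these assumptions a single nanometer of width error over one millimeter shifts the phase by roughly 4 radians, more than half a full cycle. That is enough to turn constructive interference into destructive interference, which is why practical devices typically include tuning elements to trim such errors after fabrication.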

Perhaps the most fundamental challenge is the software ecosystem. Decades of development have optimized programming languages, compilers, and algorithms for electronic computers. Photonic systems operate differently, potentially requiring new programming paradigms and compiler technologies. Companies like Lightmatter have begun developing specialized software stacks, but this remains early-stage work.

The market reality and timeline

Analysts at IDC project the market for silicon photonics components to reach approximately $3 billion by 2025, representing significant growth but still a fraction of the broader semiconductor industry. The technology will likely see adoption in stages, beginning with data center interconnects, followed by specialized AI accelerators, and eventually expanding to broader computing applications.

Price points remain a significant factor. Early photonic interconnect solutions cost substantially more than their electronic counterparts, though proponents argue the performance benefits justify the premium. As with most semiconductor technologies, costs should decline as volumes increase and manufacturing processes mature. Lightmatter’s Envise photonic AI accelerator, announced last year, is expected to command pricing comparable to high-end GPUs—approximately $10,000-20,000 per unit—when it becomes widely available.

For consumers, the benefits of photonics will likely arrive indirectly at first. Cloud services powered by photonic data centers could offer faster performance and lower costs before photonic components make their way into personal devices. When they do reach consumer hardware, expect them first in specialized applications like augmented reality headsets, where their power efficiency and processing speed offer compelling advantages.

While photonics won’t replace traditional computing overnight, its trajectory appears increasingly clear. The question isn’t whether light will play a crucial role in future computing architectures, but when and how extensively. As silicon approaches its physical limits, photons offer the next natural step in computing’s evolution, one that might finally ease the thermal and performance constraints that increasingly define the industry’s challenges.