Neuromorphic Computing: Silicon Brains That Mimic Our Own

The human brain remains nature's most efficient computing machine: it runs massively parallel processing on roughly 20 watts, an energy budget no supercomputer comes close to matching. Now an alternative approach to computing is gaining momentum in research labs worldwide. Neuromorphic computing designs hardware to mimic the brain's neural architecture, and it could change how our devices learn, adapt, and process information while using far less energy. The implications span from smarter smartphones to autonomous vehicles that learn more like humans do: not through brute-force calculation, but through adaptive neural networks built directly into silicon.

Beyond von Neumann: Computing’s Biological Inspiration

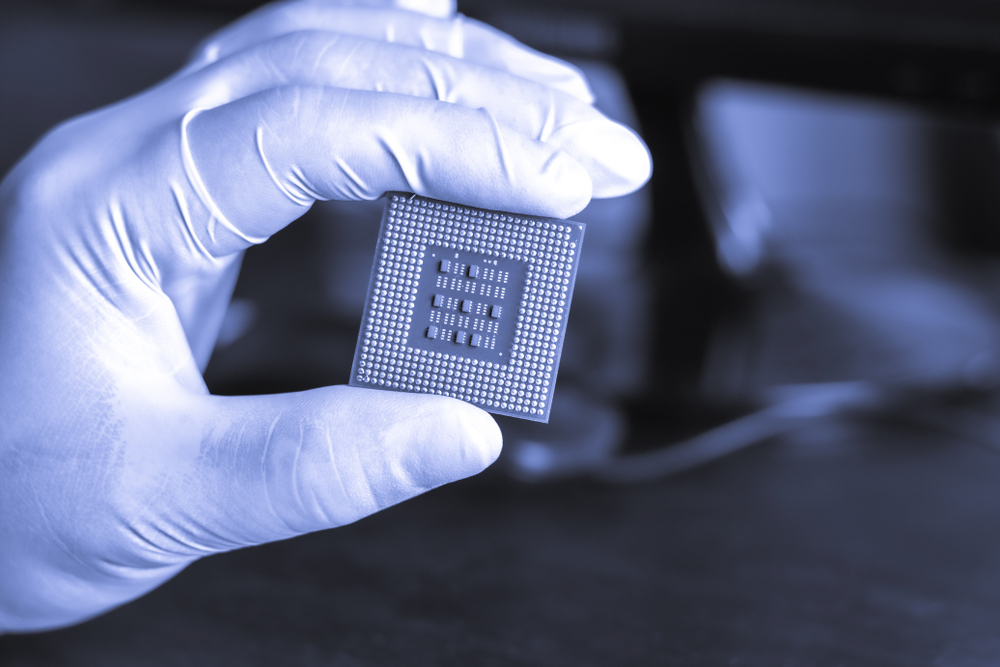

Today’s computers operate on an architecture conceived by John von Neumann in the 1940s, with separate processing and memory units that communicate through a bus. This design creates a fundamental bottleneck: data must constantly shuttle between memory and processor, limiting efficiency and consuming significant power. Neuromorphic systems take a radically different approach by embedding memory and processing together in artificial neurons and synapses that communicate asynchronously – just like our brains.

Unlike conventional computers that rely on clock-synchronized operations, neuromorphic chips run “spiking neural networks” in which a neuron communicates only when it has something to signal. Intel’s Loihi and IBM’s TrueNorth are pioneering examples: TrueNorth integrates about one million silicon neurons and Loihi roughly 130,000, all communicating through brief electrical pulses. Because idle neurons draw almost no power, these chips can perform certain AI tasks on milliwatts to a few watts, where conventional processors and GPUs may draw tens to hundreds of watts.
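To make the idea of "information flows only when needed" concrete, here is a minimal sketch of a leaky integrate-and-fire neuron, a textbook model often used to describe spiking networks. The class name, threshold, and leak factor are illustrative assumptions, not the parameters of Loihi, TrueNorth, or any other chip.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Leaky integrate-and-fire neuron (illustrative values, not from any chip)."""
    threshold: float = 1.0   # membrane potential at which the neuron fires
    leak: float = 0.9        # fraction of the potential kept per time step
    potential: float = 0.0   # current membrane potential

    def step(self, input_current: float) -> bool:
        """Advance one time step; return True if the neuron emits a spike."""
        # Old charge leaks away, new input is integrated on top of it.
        self.potential = self.potential * self.leak + input_current
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after firing
            return True
        return False


def run(neuron: LIFNeuron, inputs: list[float]) -> list[int]:
    """Return the time steps at which the neuron spiked."""
    return [t for t, current in enumerate(inputs) if neuron.step(current)]


if __name__ == "__main__":
    # Mostly silent input with two short bursts of activity.
    inputs = [0.0, 0.6, 0.6, 0.0, 0.0, 0.0, 0.3, 0.3, 0.3, 0.3]
    print(run(LIFNeuron(), inputs))  # prints [2, 9]
```

The neuron produces output at only two of the ten time steps. In event-driven hardware, the silent steps in between are where the energy savings come from.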

Memristors: The Key to Artificial Synapses

Central to neuromorphic computing are memristors: circuit elements whose resistance depends on the history of the current that has flowed through them. First theorized by Leon Chua in 1971 but only physically demonstrated in 2008 by researchers at HP Labs, memristors function remarkably like biological synapses, storing information in their physical state.

Unlike DRAM, which loses its contents when powered off, memristors retain their state without power, so the same device can both store a value and take part in computation. This property lets neuromorphic systems learn continuously from experience, strengthening connections between artificial neurons that are frequently active together, much as human brains reinforce neural pathways.
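A toy model can illustrate how a device whose state depends on its history behaves like a synapse. The sketch below is a deliberate simplification: the class name, conductance bounds, and update rate are hypothetical, and real memristor physics is considerably messier.

```python
class MemristiveSynapse:
    """Toy synapse whose conductance depends on its pulse history.

    All names and values here are illustrative assumptions, not a
    model of any specific device.
    """

    def __init__(self, g_min: float = 0.1, g_max: float = 1.0, rate: float = 0.2):
        self.g_min = g_min          # lowest reachable conductance
        self.g_max = g_max          # highest reachable conductance
        self.rate = rate            # how far each pulse moves the state
        self.conductance = g_min    # the stored "weight"

    def potentiate(self) -> None:
        """A strengthening pulse moves conductance part of the way to g_max."""
        self.conductance += self.rate * (self.g_max - self.conductance)

    def depress(self) -> None:
        """A weakening pulse moves conductance part of the way to g_min."""
        self.conductance -= self.rate * (self.conductance - self.g_min)

    def transmit(self, voltage: float) -> float:
        """Ohm's law: the read current is conductance times voltage."""
        return self.conductance * voltage


if __name__ == "__main__":
    synapse = MemristiveSynapse()
    for _ in range(3):          # the connection is used three times
        synapse.potentiate()
    print(round(synapse.conductance, 4))  # prints 0.5392
```

The weight lives in the device state itself, so reading it (`transmit`) and storing it are the same physical element. That is the sense in which memory and processing blend together.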

Recent breakthroughs at the University of Massachusetts Amherst have produced memristors from protein nanowires, potentially allowing for biodegradable neuromorphic components. Meanwhile, researchers at Stanford have developed analog memristor arrays that can be trained to recognize handwritten digits with accuracy rivaling traditional digital approaches while using a fraction of the energy.
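Analog memristor arrays are commonly described as crossbars: each input line is connected to each output line through one memristor, and the array computes a weighted sum in a single physical step. The sketch below shows that general principle using Ohm's and Kirchhoff's laws. It is an idealized illustration with made-up values, not a description of the Stanford or UMass Amherst devices.

```python
def crossbar_currents(conductances: list[list[float]],
                      voltages: list[float]) -> list[float]:
    """Output currents of an ideal memristor crossbar.

    conductances[i][j] is the conductance (siemens) of the device joining
    input row i to output column j; voltages[i] is the voltage on row i.
    Each device passes G * V (Ohm's law), and the currents on a column
    add up (Kirchhoff's current law), giving a matrix-vector product.
    """
    if len(conductances) != len(voltages):
        raise ValueError("one voltage is required per crossbar row")
    n_cols = len(conductances[0])
    if any(len(row) != n_cols for row in conductances):
        raise ValueError("all crossbar rows must have the same length")
    return [
        sum(conductances[i][j] * voltages[i] for i in range(len(voltages)))
        for j in range(n_cols)
    ]


if __name__ == "__main__":
    # Hypothetical 2x2 array: the stored conductances act as weights.
    weights = [[1.0, 0.5],
               [0.0, 2.0]]
    inputs = [1.0, 0.5]
    print(crossbar_currents(weights, inputs))  # prints [1.0, 1.5]
```

In software this is a nested loop. In an analog array every multiplication and addition happens at once as current flows, which is where the energy advantage in tasks such as digit recognition is expected to come from.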

Real-Time Learning Without the Cloud

One of the most promising applications of neuromorphic computing is enabling edge devices to learn independently without constantly communicating with cloud servers. Current AI systems typically require massive data centers for training, with only the finished models deployed to devices. Neuromorphic chips could change this paradigm entirely.

Consider an autonomous drone equipped with a neuromorphic processor. Rather than relying on pre-programmed responses or cloud connections, it could learn to navigate new environments in real time, adapting to changing conditions much as a bird does. This continuous learning would happen with minimal power consumption, which is critical for battery-powered devices.

The market implications are substantial. According to research firm Gartner, edge AI processing is projected to reach $15.7 billion by 2025, with neuromorphic approaches potentially capturing a significant portion of this growing segment. Companies like BrainChip have already commercialized neuromorphic solutions, with their Akida processor finding applications in automotive systems and security cameras that can identify unusual activities without cloud connectivity.

Solving Computing’s Energy Crisis

Perhaps the most compelling argument for neuromorphic computing is energy efficiency. The human brain performs remarkable feats of pattern recognition and analysis on about 20 watts, roughly the power of a dim light bulb. Large data centers, by contrast, can draw as much electricity as a small city.

This efficiency gap becomes increasingly problematic as computing demands grow. By one widely cited 2019 estimate, training a single large language model with an extensive architecture search can generate carbon emissions equivalent to five cars over their lifetimes. Neuromorphic approaches offer a potential solution: IBM’s TrueNorth chip has demonstrated image recognition tasks while consuming just 70 milliwatts, reportedly hundreds of times less than conventional hardware performing similar tasks.

Researchers at the University of California, San Diego recently demonstrated a neuromorphic system that can perform complex voice recognition tasks while consuming just 2 milliwatts of power. At scale, such efficiency improvements could dramatically reduce the environmental impact of AI and extend the battery life of smart devices from hours to days or even weeks.
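The "hours to days or weeks" claim is simple arithmetic: battery life is stored energy divided by power draw. The sketch below works it through. Only the 2-milliwatt figure comes from the text above. The 1 watt-hour battery and the 100-milliwatt conventional processor are hypothetical round numbers chosen for illustration, and the calculation ignores everything else the device powers.

```python
def battery_life_hours(capacity_wh: float, power_mw: float) -> float:
    """Hours a battery lasts when it supplies a constant power draw.

    capacity_wh: battery capacity in watt-hours
    power_mw:    average power draw in milliwatts
    """
    if power_mw <= 0:
        raise ValueError("power draw must be positive")
    # Convert watt-hours to milliwatt-hours, then divide by the draw.
    return capacity_wh * 1000 / power_mw


if __name__ == "__main__":
    capacity = 1.0  # hypothetical 1 Wh battery
    print(battery_life_hours(capacity, 100.0))  # assumed 100 mW chip: 10.0 hours
    print(battery_life_hours(capacity, 2.0))    # 2 mW chip: 500.0 hours
```

Under these assumptions the same battery lasts 10 hours in one case and 500 hours, or about three weeks, in the other.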

Challenges on the Path to Silicon Neurons

Despite promising advances, significant hurdles remain before neuromorphic computing enters mainstream use. Programming these systems requires fundamentally different approaches than traditional computing. Developers must think in terms of neural network topologies and spike timing rather than sequential algorithms – a paradigm shift that requires new tools and training.
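As one small illustration of what "thinking in spike timing" means, consider latency coding, a scheme in which a stronger input fires earlier rather than producing a larger number. The sketch below is a simplified assumption about how such an encoding could look. The function names and the 10-step time window are hypothetical, and real neuromorphic toolchains differ.

```python
def latency_encode(intensities: list[float], max_time: int = 10) -> list[int]:
    """Map each intensity in [0, 1] to a spike time: stronger means earlier.

    An intensity of 1.0 spikes at time 0; an intensity of 0.0 spikes at
    max_time, the end of the (hypothetical) encoding window.
    """
    times = []
    for value in intensities:
        if not 0.0 <= value <= 1.0:
            raise ValueError("intensities must lie between 0 and 1")
        times.append(round((1.0 - value) * max_time))
    return times


def firing_order(spike_times: list[int]) -> list[int]:
    """Return input indices sorted by when they fire, earliest first."""
    return sorted(range(len(spike_times)), key=lambda i: spike_times[i])


if __name__ == "__main__":
    pixels = [0.5, 1.0, 0.0]           # e.g. three pixel brightness values
    spikes = latency_encode(pixels)
    print(spikes)                       # prints [5, 0, 10]
    print(firing_order(spikes))         # prints [1, 0, 2]
```

The information is carried by when each input fires, not by a stored value that a sequential program reads. Downstream neurons react to the order and timing of events.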

Materials science challenges also persist. While memristors show promise, scaling their production to commercial levels with consistent performance remains difficult. Hybrid approaches that combine conventional digital elements with neuromorphic components may provide a transitional path forward.

Perhaps most fundamentally, researchers are still discovering the optimal architectures for different applications. Unlike conventional computing with its standardized instruction sets, neuromorphic systems might need specialized designs for different cognitive tasks – much like specialized regions in the human brain.

Nevertheless, investment continues to accelerate. The European Union’s Human Brain Project, a decade-long flagship backed by several hundred million euros, funded neuromorphic platforms such as SpiNNaker and BrainScaleS, while DARPA’s SyNAPSE program has funded significant advances in the field, including IBM’s TrueNorth. Major semiconductor companies like Samsung and Qualcomm are also developing neuromorphic technologies, anticipating applications in next-generation mobile devices and IoT systems.

As these investments bear fruit, we may soon find our devices not just calculating, but perceiving, learning, and adapting to the world around them – powered by silicon brains that operate more like our own.